Oxla

Building Big Data Migrators for the World’s Fastest Distributed Database

Expertise

Custom Software Development

Platforms

Cloud

Industry

Data Processing

About Oxla

Oxla enables data processing, analytics, and storage that is easily scalable, reliable, and simpler to use than other OLAP solutions. Written from scratch, it aims to deliver big data analytics at speeds approaching those of commercial database management systems while scaling to the size of your business.

The Challenge

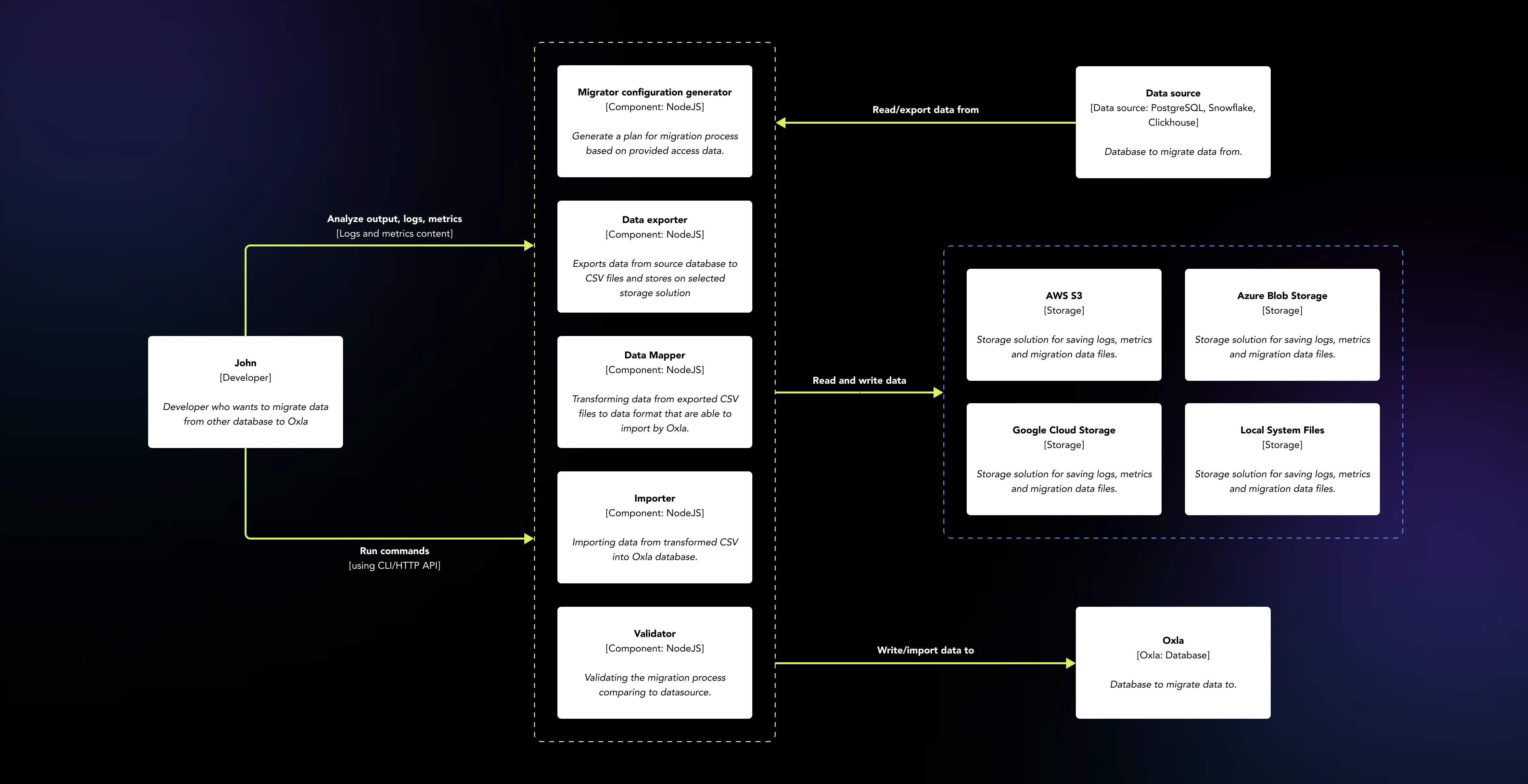

Wanting to strengthen its reputation as the world's fastest distributed database, Oxla needed a new set of tools to ease data migration from different types of databases, especially for Big Data. The EL Passion team was responsible for developing these tools, covering three of the most significant database solutions: PostgreSQL, Snowflake, and ClickHouse.

Oxla aims to combine the strengths of all three solutions: simple communication, thanks to its similarity to the PostgreSQL communication protocol, and the ability to store and process huge amounts of data in a scalable cloud environment, like Snowflake and ClickHouse. And we helped them get there.

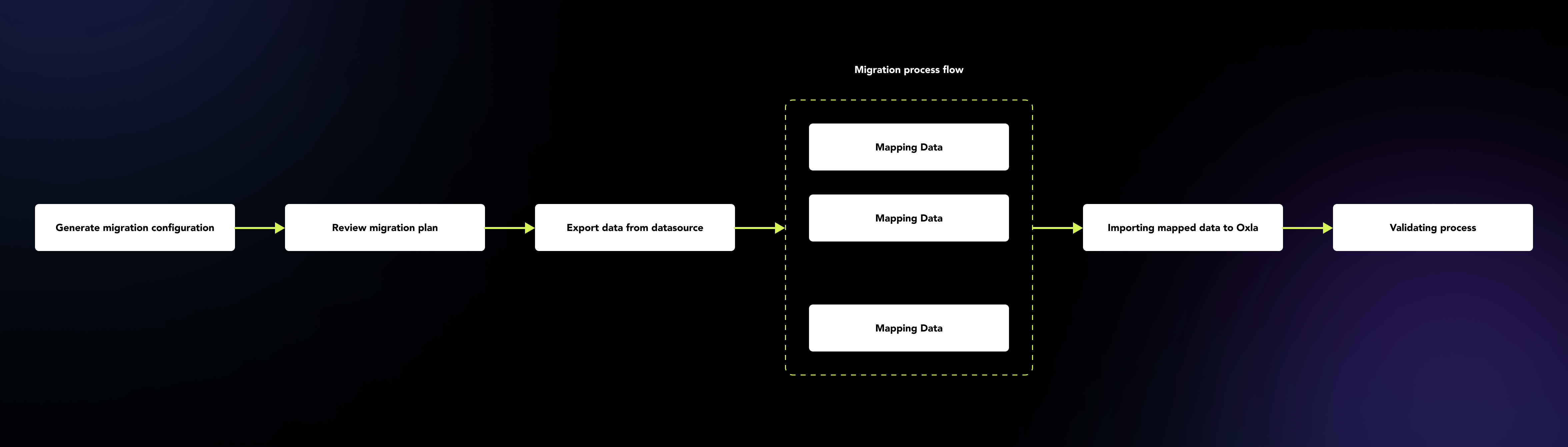

Data mapping supported by different database systems

Migrating vast amounts of data can be time-consuming, complicated, and error-prone, and it usually needs user supervision. The migrators we developed make the process more user-friendly: the user sets up a migration configuration in the first steps, and the migrator then handles the rest automatically.

Migrating data types from various database systems, from numbers and strings to more complicated ones like JSON, requires a deep analysis of the edge cases that can cause problems. Unsupported data types and differing value ranges between database systems are only some of the cases the Oxla migrators needed to handle without losing the value or the meaning of the data.
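The value-range problem can be illustrated with a small conversion helper that reports a failure instead of silently truncating. This is only a sketch under assumptions: the `toInt32` helper, the `MapResult` shape, and the choice of a 32-bit target column are hypothetical and do not describe the rules the Oxla migrators actually apply.

```typescript
// Hypothetical sketch: convert a source value for a 32-bit integer column
// and report a reason instead of silently truncating or wrapping.
// The names and the int32 target are assumptions for illustration.

type MapResult =
  | { ok: true; value: number }
  | { ok: false; reason: string };

const INT32_MIN = -2147483648;
const INT32_MAX = 2147483647;

function toInt32(raw: string): MapResult {
  const text = raw.trim();
  // Accept only plain decimal integers (no exponents, hex, or fractions).
  if (!/^[+-]?\d+$/.test(text)) {
    return { ok: false, reason: `not an integer: "${raw}"` };
  }
  const n = Number(text);
  if (n < INT32_MIN || n > INT32_MAX) {
    return { ok: false, reason: `out of int32 range: ${text}` };
  }
  return { ok: true, value: n };
}
```

A caller could use the failure reason to pick a wider target type or to log the row for review, so the original value is never lost without a trace.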

Logs and metrics data at hand

Every tool can run into cases that nobody predicted, especially in long-running processes that can take days or, in some cases, weeks. The Oxla migrators support the user in solving these problems as well.

Every running migration collects logs and metrics at every stage of the process so the user can detect and identify problems with their data migration. These are saved into SQLite files either when the migration finishes or when it is stopped by the system, so no data is lost, no matter how big the process is.
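The "saved on finish or on stop" behavior can be sketched with a `try`/`finally` block that flushes collected entries on both paths. Everything here is an assumption for illustration: the `Sink` interface stands in for a SQLite writer, and `runStages`, `LogEntry`, and `Stage` are hypothetical names, not the migrators' real API.

```typescript
// Hypothetical sketch: buffer log entries and always flush them, whether the
// run finishes or is stopped. The Sink stands in for a SQLite file writer.

type Outcome = "finished" | "stopped";

interface LogEntry {
  stage: string;
  message: string;
}

interface Sink {
  write(entries: LogEntry[], outcome: Outcome): void;
}

interface Stage {
  name: string;
  run: () => void;
}

function runStages(stages: Stage[], sink: Sink): Outcome {
  const entries: LogEntry[] = [];
  let outcome: Outcome = "stopped";
  try {
    for (const stage of stages) {
      entries.push({ stage: stage.name, message: "started" });
      stage.run();
      entries.push({ stage: stage.name, message: "done" });
    }
    outcome = "finished";
  } catch (err) {
    // Record why the run stopped before flushing.
    entries.push({ stage: "error", message: String(err) });
  } finally {
    // Runs on both paths, so collected entries are never dropped.
    sink.write(entries, outcome);
  }
  return outcome;
}
```

Keeping the sink behind an interface means the same run loop can be exercised in tests with an in-memory sink and in production with a file-backed one.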

Supporting big cloud storage solutions: AWS S3, Google Cloud Storage, Azure Blob Storage

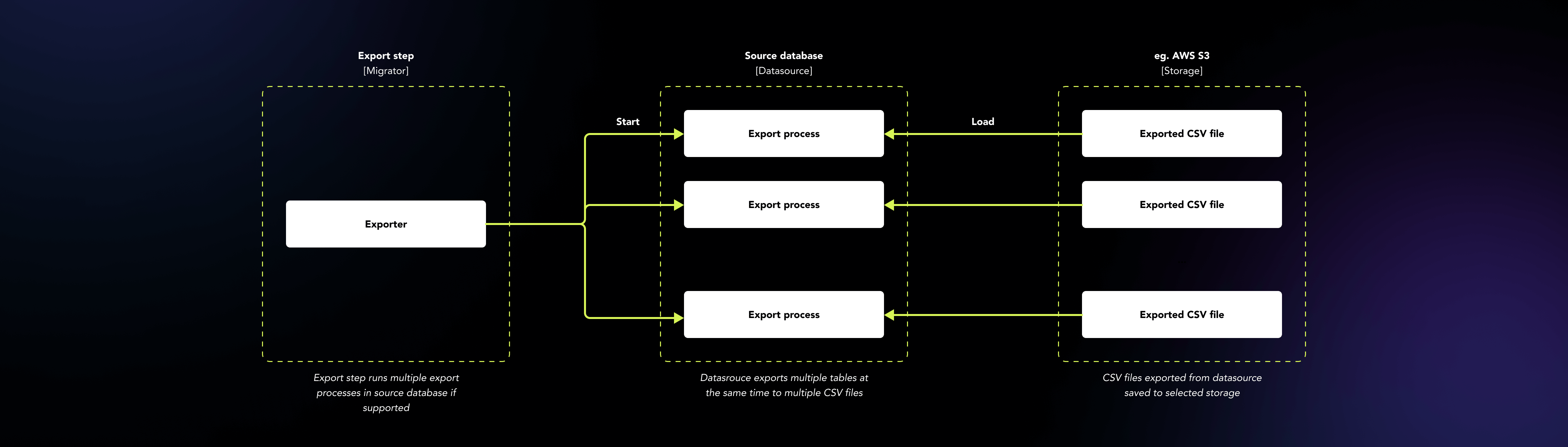

Oxla, as a distributed database, works well in the cloud environment. Storing a huge amount of data on a personal computer while migrating is simply impractical. To solve this problem, the migrators work with cloud storage solutions like AWS S3, Google Cloud Storage, and Azure Blob Storage. Throughout the process, the migrators store the data in the selected cloud storage, in a separate path for each migration, together with logs and metrics. This way, whether the migration ends in failure or success, we still have access to its artifacts: data, logs, and metrics files.
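A separate path per migration can be pictured as a key-building helper like the one below. The layout (`migrations/<id>/<kind>/<file>`), the `artifactKey` function, and the sanitizing rule are all assumptions made for this sketch; the actual path scheme used by the migrators is not described here.

```typescript
// Hypothetical sketch: build an object-storage key so that every migration
// keeps its data, logs, and metrics under its own prefix.
// The layout and names are assumptions for illustration.

type ArtifactKind = "data" | "logs" | "metrics";

function artifactKey(
  migrationId: string,
  kind: ArtifactKind,
  fileName: string,
): string {
  // Replace characters that could break out of the intended prefix.
  const safe = (part: string): string => part.replace(/[^A-Za-z0-9._-]/g, "_");
  return ["migrations", safe(migrationId), kind, safe(fileName)].join("/");
}
```

Because each of the three storage services addresses objects by a string key inside a bucket or container, one key scheme like this could be shared across them.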

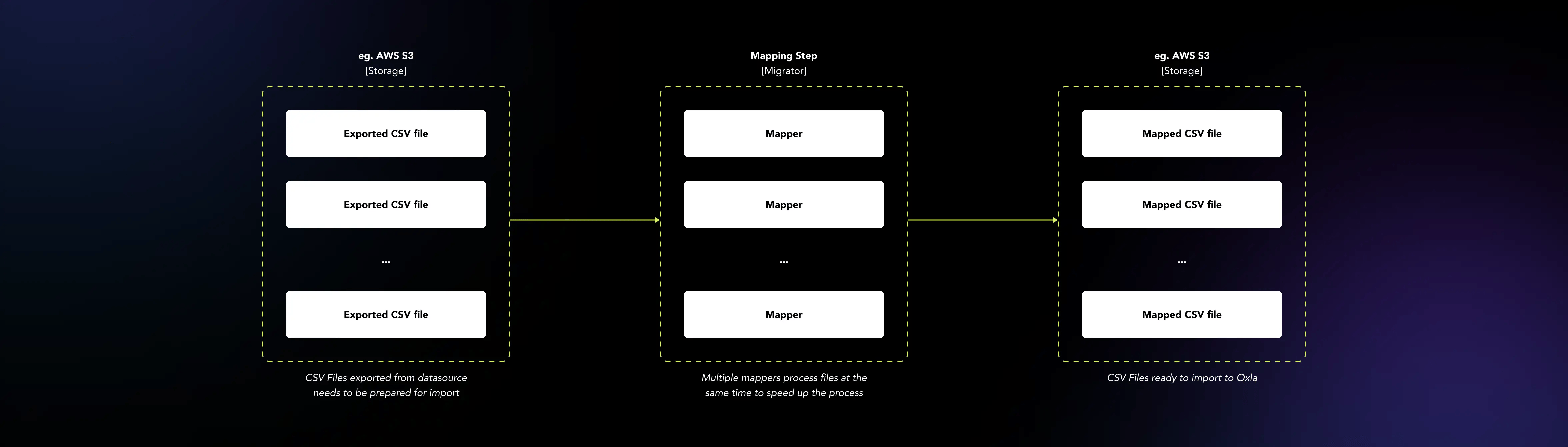

Improving the migration process with parallel and stream processing

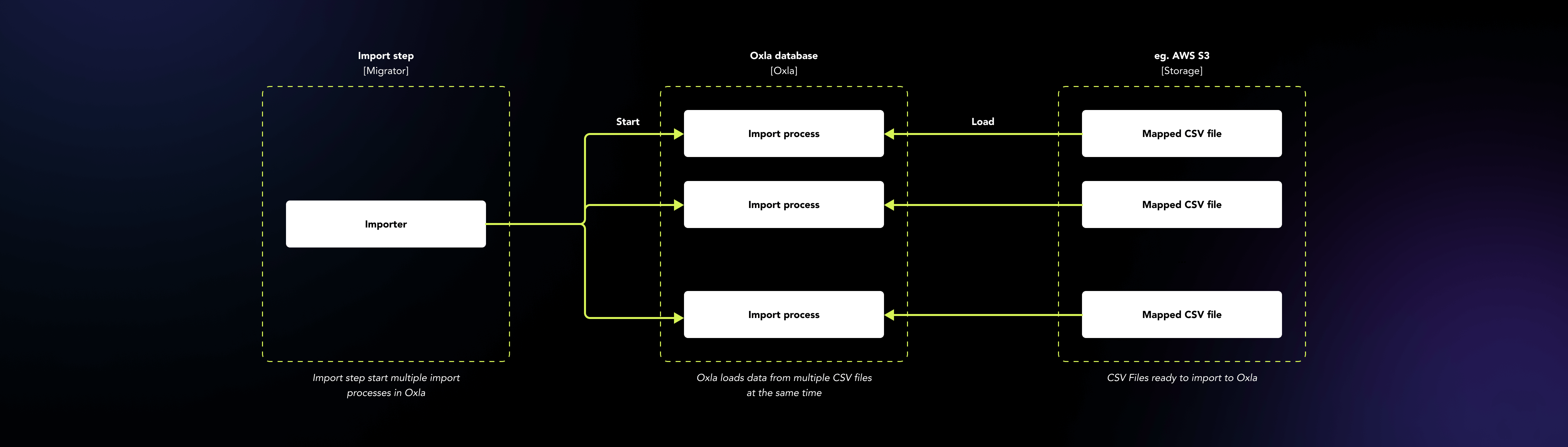

Processing huge amounts of data takes time, memory, and money. To reduce the resources needed for migration, we improved data processing by implementing parallel and stream processing. The migrators process several tables or files at the same time using multiple cores on the machine. Additionally, because data is streamed to and from the migrator, the memory required for the whole process is kept to a minimum.
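The two ideas can be sketched in a few lines. This is a simplified illustration, not the migrators' implementation: `transformRows` uses an async generator as a stand-in for a stream, and `runWithLimit` shows bounded concurrency inside a single Node.js process. Spreading work across multiple cores would additionally need worker threads or child processes, which are not shown. Both function names are hypothetical.

```typescript
// Hypothetical sketch. Streaming: rows pass through one at a time, so memory
// use does not grow with table size.
async function* transformRows(
  rows: AsyncIterable<string[]> | Iterable<string[]>,
  convert: (row: string[]) => string[],
): AsyncGenerator<string[]> {
  for await (const row of rows) {
    yield convert(row);
  }
}

// Bounded concurrency: run tasks (for example, one per table) with at most
// `limit` in flight at once, keeping results in the original task order.
async function runWithLimit<T>(
  tasks: Array<() => Promise<T>>,
  limit: number,
): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0;

  async function worker(): Promise<void> {
    while (next < tasks.length) {
      const index = next++; // safe: no await between the check and the claim
      results[index] = await tasks[index]();
    }
  }

  const size = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: size }, () => worker()));
  return results;
}
```

The limit keeps a large migration from opening every table at once, which bounds both open connections and buffered data.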

Testing the migrators’ performance on Big Data

Testing an application that interacts directly with different types of databases is not an easy task. To make it more manageable, we prepared a data set generator that produces different kinds of data as CSV files, which can be loaded into a selected database and used for testing the migration process. Database providers like Snowflake and ClickHouse offer sample datasets that we also used to test the performance and quality of the migrators. To simulate a real user environment from the HTTP API perspective, we developed a Proof of Concept (POC) web application that presents the migrators as a SaaS application for migrating from the selected source database to Oxla as the destination database.
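A data set generator of this kind can be quite small. The sketch below is an assumption-laden illustration rather than the generator built for the project: the column names, the sample words, and the `generateCsv`, `csvField`, and `seededRandom` helpers are all hypothetical. It uses a seeded pseudo-random generator so the same seed reproduces the same file, which makes failing test runs repeatable.

```typescript
// Hypothetical sketch: a small seeded generator (mulberry32-style) so the
// same seed always yields the same sequence and therefore the same CSV.
function seededRandom(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Quote a field only when it contains a comma, quote, or line break,
// doubling any embedded quotes.
function csvField(value: string): string {
  return /[",\n\r]/.test(value) ? `"${value.replace(/"/g, '""')}"` : value;
}

function generateCsv(rowCount: number, seed: number): string {
  const rand = seededRandom(seed);
  // Sample labels deliberately include a comma and quotes to hit edge cases.
  const words = ["alpha", "beta, gamma", 'say "hi"', "delta"];
  const lines = ["id,label,amount"];
  for (let id = 1; id <= rowCount; id++) {
    const label = words[Math.floor(rand() * words.length)];
    const amount = (rand() * 1000).toFixed(2);
    lines.push([String(id), csvField(label), amount].join(","));
  }
  return lines.join("\n") + "\n";
}
```

Including awkward values such as embedded commas and quotes in the generated data is one way to exercise the edge cases a migrator has to survive.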

Distribution and availability

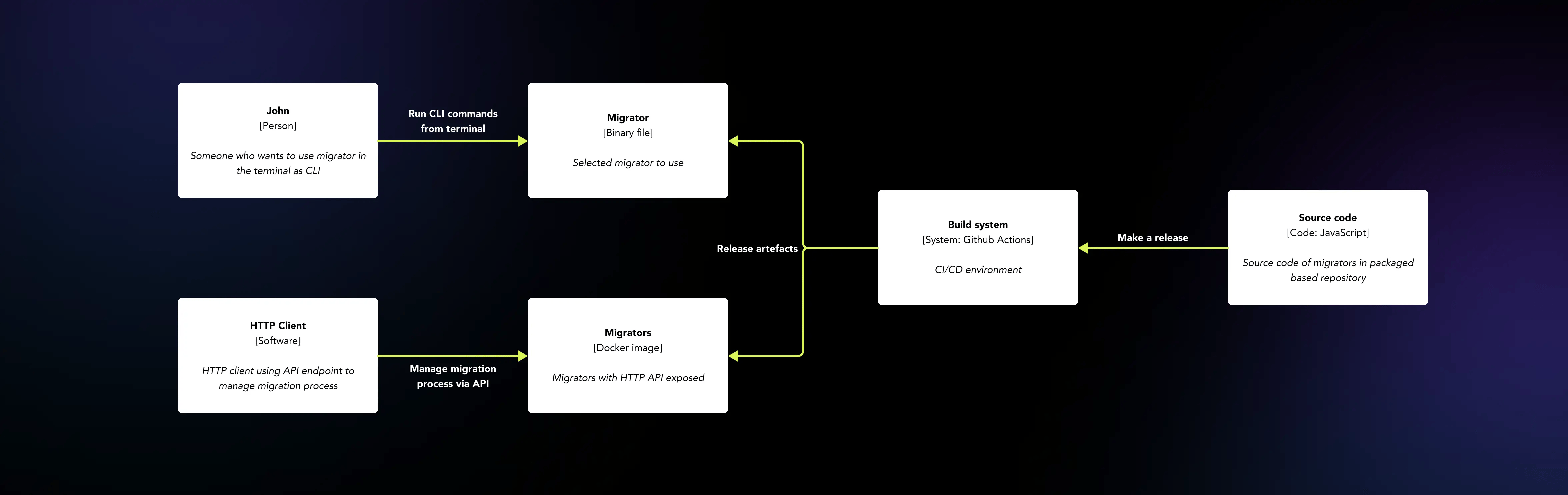

This kind of application is distributed and made available differently from a web application. The migrators can be used as standalone CLI applications that run in the terminal on Linux, macOS, and Windows, and as services available through the HTTP API layer.

Every database should have its own migrator available to users. To achieve both ways of distribution, we built the application to run in a Node.js environment and organized the code as packages. This way, we can pack each migrator as an application with an embedded Node.js environment and the migrator code, and distribute it as one binary executable file to be used as a standalone CLI application.

In addition, because the code is organized into modules and packages thanks to PNPM Workspaces and Nx, the same code can be used when implementing the HTTP API layer, without the need to write anything from scratch or maintain separate code for the two ways of distribution. Moreover, the migrators are also distributed as Docker images with the binary files inside, so they can be used in an isolated Docker environment in the same way as the CLI application. Everything is done automatically through configured GitHub Actions that act as a CI/CD solution supporting the build, test, and delivery processes.

Tools

- NestJS
- Node.js
- TypeScript
- React
- Docker
- SQLite

Deliverables

- Binary executables, one for each migrator type (Snowflake, PostgreSQL, ClickHouse)
- Docker images with the embedded migrator binary executable
- HTTP API for migrators as a Docker image
- Integration with different cloud storage solutions: Google Cloud Storage, AWS S3, and Azure Blob Storage
- Detailed log and monitoring data stored using SQLite
- Parallel processing of data files